Demoing an LLM-based application

Some (opinion-laced) notes and tips about demoing LLM-based applications that I picked up while demoing Cody (Sourcegraph’s AI coding assistant).

- 90% of devs and eng leaders wish they got to work more with LLMs in their day job and are open-minded or downright passionate about (the potential of) LLMs.

- The other 10% of devs (LLM skeptics/deniers) have LLMs living rent-free in their head. If they’re in the meeting, they’ll be even more engaged, because they will try to poke holes in the demo. We have an ideal kind of LLM application, so we’ll probably be the product that wins many of these people over, in due time.

- Always, always establish that Cody is a work in progress and will have magical moments and embarrassing moments.

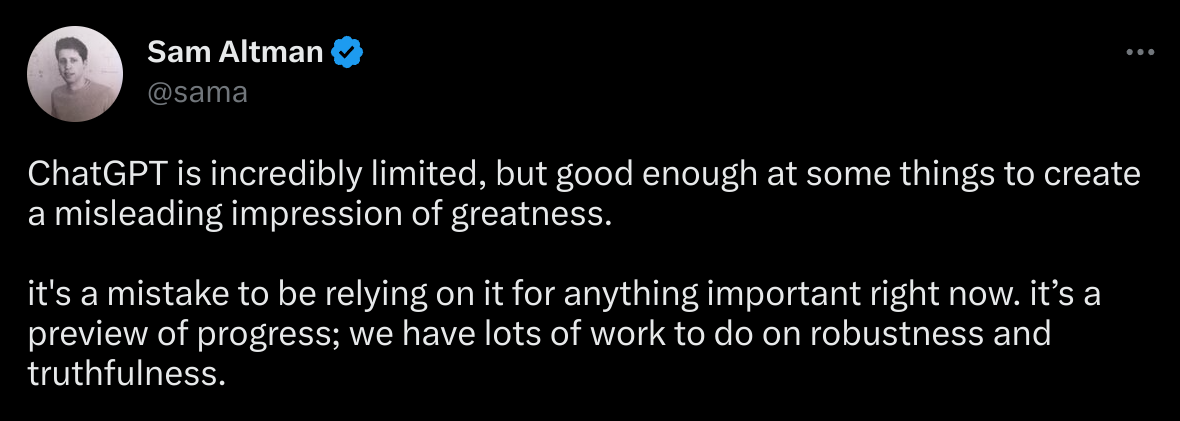

- Follow the example of Sam Altman (the OpenAI CEO):

- Set expectations low for Cody before you show it doing anything so that it always exceeds expectations.

- Even if it works perfectly, remind them that it’s not actually perfect: say you’re worried the demo gave them an overly positive impression of Cody, and that we’re working hard to improve it. (How you phrase this is up to your personal style.)

- If it all works perfectly, make a point of showing a kind of question that it does not handle well (telling them in advance, of course).

- Always show a spontaneous demo path.

- Be sure to tell them that it’s spontaneous.

- Nothing is more suspicious than a pre-canned AI demo.

- You should have a sufficiently good mental model of LLMs and Cody so that you know what will work well and what won’t.

- Give an example of how you yourself used Cody.

- Cody is literally the easiest possible interface to Sourcegraph, and it works for our handbook and docs as well.

- So, everyone on our team (including non-devs) should be able to use it and should be able to demo a recent (last ~2 days) example of how they used it. (Dogfooding might be painful, especially on bleeding-edge versions, but please share feedback and report thumbs-up/thumbs-down!)

- When relevant, prove whether Cody’s answer is correct!

- If it explains code, review that code live and check whether it got everything correct. Point out things it missed and things that were impressive.

- If it mentions a filename, open that file to prove it.

- If it generates code, try running the code (in the VS Code integrated terminal) and see whether the output is what’s expected!

- Use theatrics. AI is fun! And funny!

- On a recent trip to Japan, I would ask Cody to “translate into Japanese” some of its English responses, and then I’d say “Well, I don’t speak Japanese, so I have no clue what that says. But you do. Does that seem right?” It’s a flourish, but it worked!

- Another fun thing, depending on the audience, is to ask Cody to “now explain it in the style of an Italian chef who is annoyed that you’re interrupting his pasta-making” or other things like that.

- These theatrics show that AI is flexible and general-purpose.

- Ask for audience participation!

- This helps prove that it actually works and that it’s not just a slick AI demo.

- If they suggest a question you know Cody won’t handle well, first redirect to a similar question that it will answer well. Then follow up: explain why Cody wouldn’t have handled their initial question well, and show it failing on that question.

- Type questions casually and (depending on your style) in lowercase, and don’t bother correcting typos.

- Cody generally ignores typos and understands what you mean. If you make a typo and it still works, point that out: Cody is smart enough that you can type things out quickly without needing to be perfect.